From Chaos to Clarity: Why Your Enterprise AI Strategy Needs Agentic Architecture, Not Another Chatbot

The Hidden Cost of AI Theater: When "Intelligent" Systems Can't Remember Yesterday's Conversation

The Enterprise AI Crisis: Why "Intelligent" Systems Fail at Simple Tasks

Walk into any enterprise today, and you'll hear the same refrain: "We've deployed AI."

Dig deeper, and you'll discover a troubling reality. These "AI systems" can't complete a simple multi-step task without human intervention. Ask them to check compliance for three vehicles and generate a cost report? They'll hallucinate data, contradict themselves mid-response, or simply give up when an API call fails.

This isn't a model problem. GPT-4, Claude, and other large language models are remarkably capable. The issue runs deeper: we're building the wrong architecture.

We're asking a statistical reasoning engine to also be a database, an API orchestrator, a workflow manager, and an execution engine—simultaneously. It's architectural malpractice, and it's costing enterprises millions in failed pilots and abandoned implementations.

Consider a real-world scenario that plays out daily across organizations: A fleet manager needs to verify compliance for vehicles entering London's Ultra Low Emission Zone (ULEZ), retrieve payment history for non-compliant vehicles, and generate a cost optimization report. Simple enough, right?

With traditional chatbot architecture: The system receives the request, attempts to process all three vehicles at once, invents plausible-sounding but completely fabricated charge amounts, loses context halfway through, and produces a response that's confidently wrong. Ask the same question an hour later? Different answer. The user has no way to verify what's real and what's hallucination.

The actual workflow needed:

- Query vehicle database for first vehicle → structured data

- Query vehicle database for second vehicle → structured data

- Query vehicle database for third vehicle → structured data

- Identify non-compliant vehicles from results

- Fetch payment history for each non-compliant vehicle → structured data

- Analyze historical patterns and costs

- Generate actionable recommendations based on real data

Traditional chatbots can't reliably execute this sequence because they conflate reasoning with execution. They're trying to imagine what the database would return rather than actually querying it.

The result? A system that seems intelligent but fails at the fundamental task of coordinating deterministic operations.

The Root Cause: Architecture, Not Intelligence

Here's what's actually broken in most enterprise AI deployments:

Problem 1: Hallucination Masquerading as Confidence

LLMs are trained to complete patterns, not to admit uncertainty. When asked about vehicle compliance charges, a chatbot doesn't say "I need to check the database."

Instead, it generates plausible-sounding numbers: "ABC123 faces £12.50 ULEZ charge plus £15 Congestion Charge." Sounds authoritative. Might be completely wrong.

Problem 2: No Conditional Logic

Real workflows have dependencies. "If vehicle is non-compliant, then fetch payment history" is trivial in code but unreliable when expressed only in a prompt.

You can't reliably tell an LLM "only execute step 5 if step 4 returned specific data." It will either execute everything or get confused about what you meant.
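In deterministic code, the same dependency is an ordinary branch. A minimal sketch, assuming a compliance result shaped like the ones used later in this article; the function name planFollowUp is hypothetical:

```javascript
// Sketch: decide on a follow-up step from actual data, not from a model's guess.
// planFollowUp is a hypothetical helper, not part of any library.
function planFollowUp(complianceResult) {
  if (complianceResult.compliant === false) {
    // Only non-compliant vehicles need their payment history fetched.
    return { tool: "get_payment_history", params: { vrm: complianceResult.vrm } };
  }
  return null; // Compliant vehicles need no follow-up step.
}

const followUp = planFollowUp({ vrm: "ABC123", compliant: false });
const noFollowUp = planFollowUp({ vrm: "AMS1", compliant: true });
```

The branch either fires or it doesn't, and the outcome is the same on every run.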

Problem 3: Context Window Collapse

Conversation histories grow. Over a long session, the model starts losing track of earlier statements. It contradicts itself. Forgets user preferences. Returns different answers to identical questions.

This isn't a bug—it's a fundamental limitation of trying to maintain state in conversational context.

Problem 4: No Error Recovery

What happens when an API call fails? A human would try an alternative approach, check if the service is down, or gracefully handle the error.

A chatbot? It either halts entirely or, worse, fabricates a response pretending the call succeeded.
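What recovery could look like in code: a bounded retry with backoff that ends in an explicit, structured failure rather than a fabricated success. This is a sketch under assumptions; callWithRetry and its option names are hypothetical, and the delays are illustrative:

```javascript
// Sketch: retry a flaky async call a bounded number of times.
// On final failure, return a structured error instead of pretending success.
async function callWithRetry(fn, { attempts = 3, baseDelayMs = 100 } = {}) {
  let lastError;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return { success: true, data: await fn(), attempts: attempt };
    } catch (error) {
      lastError = error;
      if (attempt < attempts) {
        // Exponential backoff: baseDelayMs, then 2x, then 4x, ...
        const delay = baseDelayMs * 2 ** (attempt - 1);
        await new Promise(resolve => setTimeout(resolve, delay));
      }
    }
  }
  return { success: false, error: lastError.message, attempts };
}
```

The caller always receives an object it can branch on, whether the call succeeded on the first try, succeeded after retries, or failed for good.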

The pattern emerges: We're using sophisticated reasoning engines as if they were deterministic execution systems. They're not.

They excel at analysis and decision-making but fail catastrophically at the orchestration and execution that production systems demand.

The Solution: Agentic Architecture as Strategic Foundation

Here's the critical insight that separates successful AI implementations from expensive failures: Agentic AI is not a chatbot with plugins. It's a reasoning engine that delegates execution to specialized, deterministic components.

This distinction matters enormously. Chatbots with "tool use" still ask the LLM to juggle reasoning, planning, execution monitoring, and error handling simultaneously. Agentic systems separate these concerns into distinct architectural layers, each optimized for its specific purpose.

Understanding the Three-Tier Architecture

Real agentic systems implement a clear separation of responsibilities:

Tier 1: Intent Analysis Layer (The Brain)

- Receives natural language input

- Extracts structured entities (vehicle IDs, dates, user context)

- Classifies query complexity (simple lookup vs. multi-step workflow)

- Generates execution plans with conditional logic

- Returns structured JSON, not conversational text

This layer uses the LLM for what it does best: understanding messy human intent and translating it into structured plans. It doesn't try to execute anything.

Tier 2: Execution Engine (The Coordinator)

- Receives structured execution plans

- Orchestrates tool calls in sequence

- Evaluates conditional logic based on actual results

- Implements error handling and retries

- Aggregates results for synthesis

This layer is deterministic code, not probabilistic reasoning. It understands "if result.compliant === false then call get_payment_history()" without any ambiguity.

Tier 3: Tool Ecosystem (The Executors)

- Specialized, focused operations (database queries, API calls, computations)

- Deterministic inputs and outputs

- Proper error handling (never throw, always return structured responses)

- Single responsibility per tool

These aren't wrapped in LLM magic. They're regular functions, properly engineered, that return predictable results.

How This Architecture Eliminates Core Problems

Let's trace how our fleet compliance scenario actually executes:

```text
User Query: "Check compliance for AMS1, ABC123, DM70ABC and optimize costs"

→ TIER 1 (Intent Analysis):
  Structured Plan Generated:
  {
    "intent": "Fleet compliance check with cost optimization",
    "complexity": "multi_step",
    "plan": [
      {"step": 1, "tool": "check_vehicle", "params": {"vrm": "AMS1"}},
      {"step": 2, "tool": "check_vehicle", "params": {"vrm": "ABC123"}},
      {"step": 3, "tool": "check_vehicle", "params": {"vrm": "DM70ABC"}},
      {"step": 4, "tool": "get_payment_history",
       "params": {"vrm": "ABC123"},
       "conditional": {"if": "step_2.compliant === false"}},
      {"step": 5, "tool": "get_payment_history",
       "params": {"vrm": "DM70ABC"},
       "conditional": {"if": "step_3.compliant === false"}}
    ]
  }

→ TIER 2 (Execution Engine):
  Execute step 1 → Returns: {compliant: true, zones: ["ULEZ", "LEZ"]}
  Execute step 2 → Returns: {compliant: false, violations: ["ULEZ"]}
  Execute step 3 → Returns: {compliant: false, violations: ["ULEZ"]}
  Evaluate conditionals:
  - Step 4 condition met (step_2.compliant === false)
  - Step 5 condition met (step_3.compliant === false)
  Execute step 4 → Returns: {total_charges: £275, frequency: "daily"}
  Execute step 5 → Returns: {total_charges: £162.50, frequency: "weekly"}

→ TIER 1 (Response Synthesis):
  Takes raw results + original intent
  Generates: "Your fleet analysis shows ABC123 is costing £275 daily in
  ULEZ charges (£100,375 annually). Consider vehicle upgrade or route
  optimization. DM70ABC faces £162.50 weekly (£8,450 annually). Total
  annual exposure: £108,825."
```

Notice what happened: The LLM never fetched or invented the data. It planned the workflow, then synthesized the verified results into clear recommendations. The execution engine handled all the orchestration deterministically. The tools returned real, verifiable data.
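The annual figures in the synthesized response are plain arithmetic on tool output, so they are better computed in code and handed to the model as facts. A minimal sketch, assuming 365 charge days or 52 charge weeks per year; annualize and PERIODS_PER_YEAR are hypothetical names:

```javascript
// Sketch: annualize the charges returned by get_payment_history in the trace above.
// Assumption: "daily" means 365 periods per year and "weekly" means 52.
const PERIODS_PER_YEAR = new Map([["daily", 365], ["weekly", 52]]);

function annualize({ total_charges, frequency }) {
  const periods = PERIODS_PER_YEAR.get(frequency);
  if (periods === undefined) {
    return { success: false, error: `Unknown frequency: ${frequency}` };
  }
  return { success: true, annual: total_charges * periods };
}

const abc123 = annualize({ total_charges: 275, frequency: "daily" });     // 275 × 365
const dm70abc = annualize({ total_charges: 162.5, frequency: "weekly" }); // 162.50 × 52
const totalExposure = abc123.annual + dm70abc.annual;
```

These values reproduce the £100,375, £8,450, and £108,825 quoted in the response, which makes the recommendation auditable.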

Model Context Protocol: The Universal Standard for AI Tool Integration

Understanding agentic architecture reveals another problem: how do tools actually connect? Every enterprise has dozens of data sources—databases, APIs, SaaS platforms, internal services. Building custom integrations for each one creates maintenance nightmares and vendor lock-in.

Enter the Model Context Protocol (MCP), an open standard that functions as the "USB-C port for AI agents." Instead of building N×M custom integrations (N agents × M data sources), you build M MCP servers once, and any MCP-compatible agent can use them. The work shrinks to roughly N+M components.

The Business Case for MCP Adoption

The Pre-MCP Nightmare:

Your team builds custom connectors for Claude to access your database. Different connectors for GPT-4. Different authentication for each. When you upgrade Claude? All connectors break. When you add a new agent? Rebuild everything. This doesn't scale.

The MCP Solution:

Expose your vehicle database as an MCP server. Define standard tools: check_vehicle, get_payment_history, process_payment. Any MCP-compatible agent can now use these tools. The backend can evolve independently—you're programming to an interface, not an implementation.

MCP Architecture: Standardized Client-Server Communication

```text
┌──────────────────────────────────────────────┐
│            AI Agent (MCP Client)             │
│  • Claude, GPT-4, or custom agent            │
│  • Generates structured tool requests        │
│  • Receives structured tool responses        │
└──────────────────────────────────────────────┘
                  ↕ MCP Protocol
┌──────────────────────────────────────────────┐
│          MCP Server (Tool Provider)          │
│  • Exposes standardized tool interface       │
│  • Handles authentication & authorization    │
│  • Manages connection to backend systems     │
│  • Returns typed, validated responses        │
└──────────────────────────────────────────────┘
                  ↕ Internal APIs
┌──────────────────────────────────────────────┐
│         Backend Systems (Your Data)          │
│  • Databases, APIs, SaaS platforms           │
│  • Unchanged by MCP layer                    │
└──────────────────────────────────────────────┘
```

This separation buys you flexibility. The MCP server acts as a translation layer—smart enough to handle the messy details of your backend, simple enough that any agent can use it.
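On the wire, MCP tool calls are JSON-RPC 2.0 messages using the tools/call method. The sketch below handles such a request in memory to show the message shapes. The handler table and its stubbed compliance rule are hypothetical, and a real server would normally be built on an MCP SDK rather than hand-rolled:

```javascript
// Sketch: JSON-RPC 2.0 request/response shapes for an MCP "tools/call".
// toolHandlers and its stub data are hypothetical stand-ins for a real backend.
const toolHandlers = new Map([
  ["check_vehicle", ({ vrm }) => ({ vrm, compliant: vrm !== "ABC123" })],
]);

function handleRequest(request) {
  const reply = body => ({ jsonrpc: "2.0", id: request.id, ...body });

  if (request.method !== "tools/call") {
    // -32601 is the JSON-RPC 2.0 code for "Method not found".
    return reply({ error: { code: -32601, message: "Method not found" } });
  }

  const { name, arguments: args = {} } = request.params;
  const handler = toolHandlers.get(name);
  if (!handler) {
    // Protocol-level problem: reported as a JSON-RPC error (-32602, invalid params).
    return reply({ error: { code: -32602, message: `Unknown tool: ${name}` } });
  }

  try {
    const text = JSON.stringify(handler(args));
    return reply({ result: { content: [{ type: "text", text }], isError: false } });
  } catch (error) {
    // Tool execution failure: reported inside the result with isError set.
    return reply({ result: { content: [{ type: "text", text: error.message }], isError: true } });
  }
}

const response = handleRequest({
  jsonrpc: "2.0",
  id: 1,
  method: "tools/call",
  params: { name: "check_vehicle", arguments: { vrm: "ABC123" } },
});
```

Because the agent sees only this envelope, the backend behind check_vehicle can change freely as long as the tool name and argument shape stay stable.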

Practical Use Case: A Potential MCP Implementation for Transportation

To put the theory into practice, consider a complex real-world scenario: a London Transport MCP Server that manages vehicle compliance, payments, and disputes for London's emission zones.

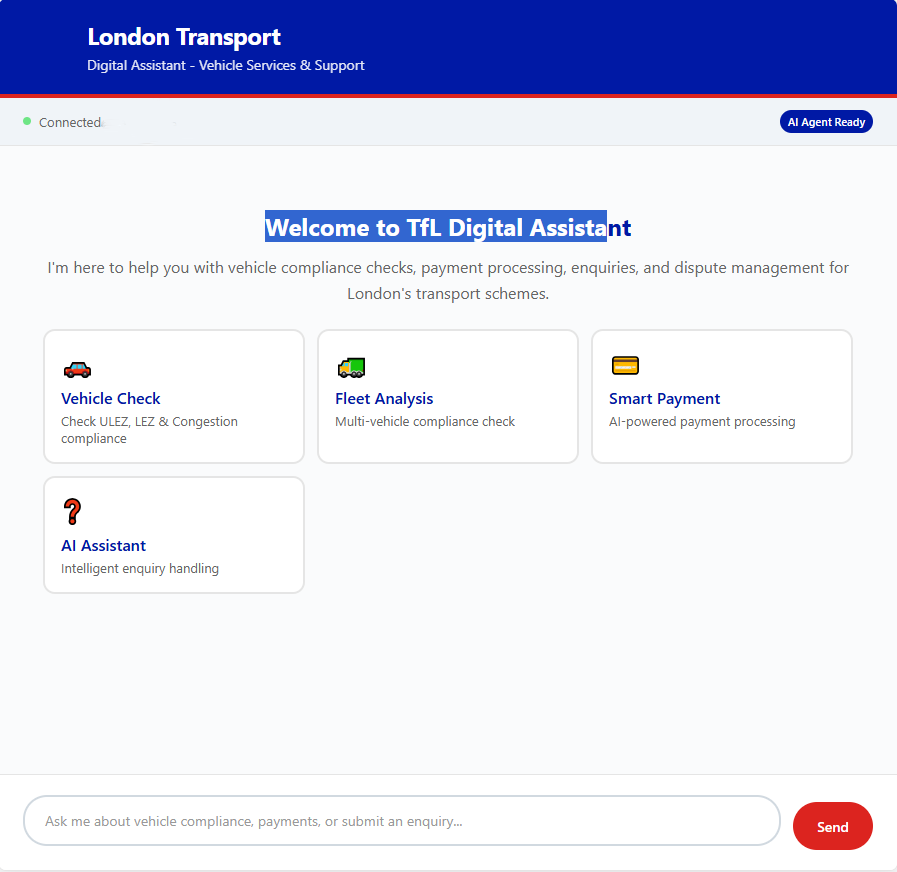

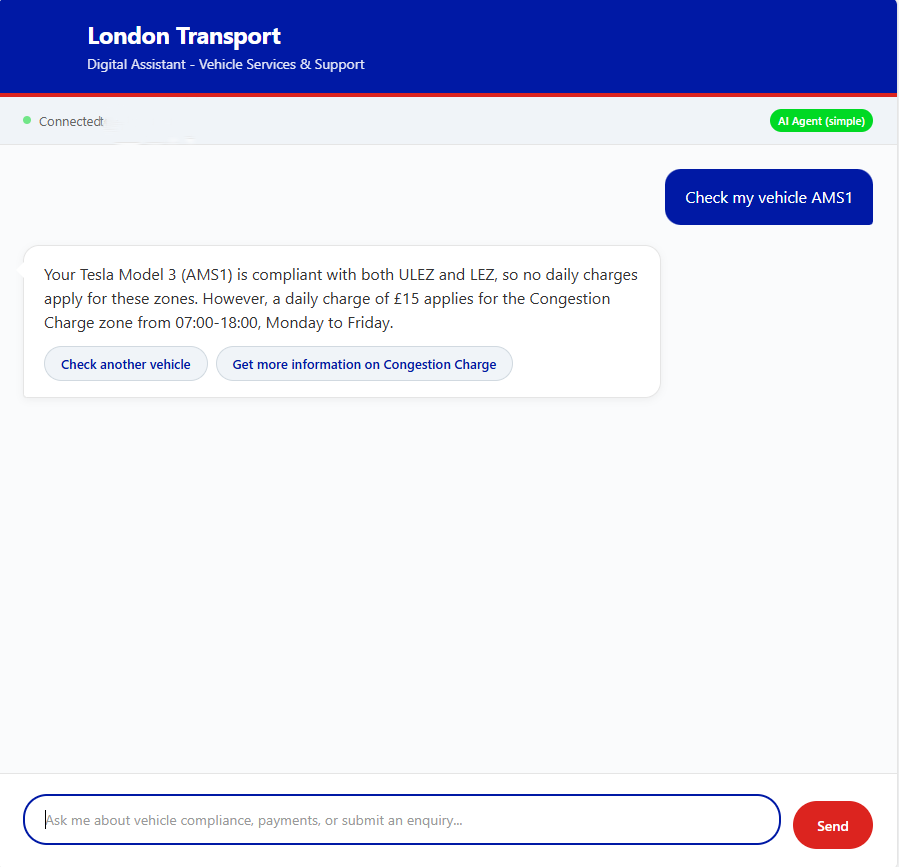

Chatbot Home Interface

The clean, intuitive interface of the London Transport MCP Server - users can query vehicle compliance and payments in natural language


The Business Challenge

London operates multiple charging schemes with different rules, rates, and exceptions:

- Ultra Low Emission Zone (ULEZ): £12.50 daily for non-compliant vehicles

- Congestion Charge: £15 daily in central London

- Low Emission Zone (LEZ): Commercial vehicle restrictions

Each has different operating hours, geographical boundaries, vehicle exemptions, and penalty structures. The payment system must handle:

- Real-time compliance verification across multiple databases

- Payment processing for different schemes and date ranges

- Historical queries with complex filtering

- Dispute management with evidence submission

- Proactive alerts for zone entry

Traditional approaches failed because they tried to encode all this business logic in prompts. The agent would get confused about which charges applied when, hallucinate exemption rules, or lose track of payment status across conversation turns.

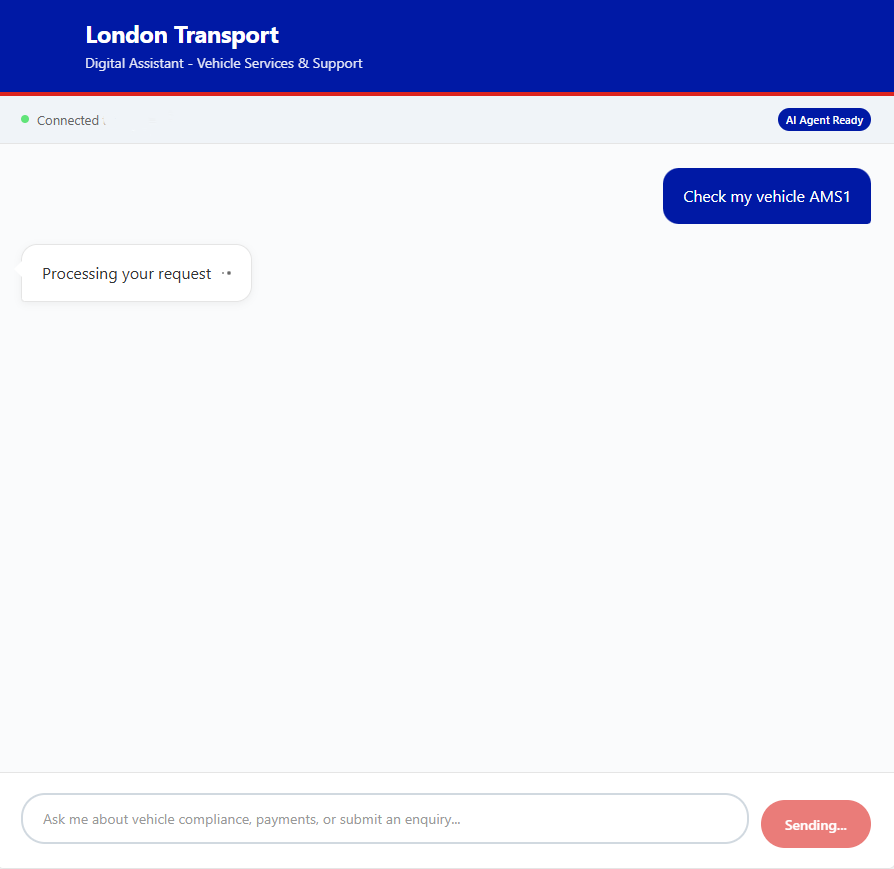

Processing

The system processing a multi-step vehicle compliance query - notice the structured approach to handling complex requests


The Agentic Architecture Solution

The London Transport MCP Server implements a clean three-tier architecture:

Tier 1: Natural Language Understanding

```text
User: "I'm driving ABC123 into London next Tuesday, what will it cost?"

→ Intent Analysis extracts:
  - Vehicle: ABC123
  - Date: Next Tuesday (2026-02-03)
  - Intent: Cost projection
  - Action: Check compliance + calculate charges

→ Generates plan:
  Step 1: check_vehicle(ABC123) → get compliance status
  Step 2: calculate_charges(ABC123, "2026-02-03") → get costs
  Step 3: synthesize_recommendation() → present options
```

Tier 2: Orchestration via MCP

```javascript
// MCP Server exposes standardized tools
const tools = [
  {
    name: "check_vehicle",
    description: "Verify ULEZ/LEZ/Congestion compliance",
    parameters: {
      vrm: "string (vehicle registration mark)",
      schemes: "array (optional: specific schemes to check)"
    },
    returns: {
      compliant: "boolean",
      schemes: "array of applicable schemes",
      exemptions: "array of exemption codes",
      vehicle_details: "object with make/model/year"
    }
  },
  {
    name: "pay_charge",
    description: "Process payment for specified scheme and date",
    parameters: {
      vrm: "string",
      scheme: "enum (ULEZ|CONGESTION|LEZ)",
      date: "ISO date string"
    },
    returns: {
      transaction_id: "string",
      amount: "number",
      confirmation: "string"
    }
  }
];
```

Tier 3: Deterministic Tool Implementation

```javascript
async function checkVehicle({ vrm, schemes = ["ULEZ", "CONGESTION", "LEZ"] }) {
  try {
    // Query actual vehicle database
    const vehicle = await vehicleDB.findOne({ registration: vrm.toUpperCase() });

    if (!vehicle) {
      return {
        success: false,
        error: "Vehicle not found",
        found: false
      };
    }

    // Check compliance against emission standards.
    // Simplified thresholds: the real ULEZ standard is Euro 4 for petrol
    // and Euro 6 for diesel vehicles.
    const compliance = {
      ULEZ: vehicle.euroStandard >= 6,
      LEZ: vehicle.euroStandard >= 4 || vehicle.type !== "commercial",
      CONGESTION: true // All vehicles charged, no exemption check
    };

    return {
      success: true,
      found: true,
      vrm: vrm.toUpperCase(),
      compliant: schemes.every(s => compliance[s]),
      schemes: schemes.filter(s => !compliance[s]), // Schemes the vehicle fails
      vehicle_details: {
        make: vehicle.make,
        model: vehicle.model,
        year: vehicle.year,
        euroStandard: vehicle.euroStandard
      }
    };
  } catch (error) {
    // Never throw - always return structured error
    return {
      success: false,
      error: error.message,
      found: false
    };
  }
}
```
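The Tier 1 plan calls a calculate_charges tool that is not shown. One possible shape for it, as a sketch only: the signature below is hypothetical and differs from the plan's (vrm, date) form, the rates are the daily figures quoted in this article, and LEZ is left out because no rate is given here:

```javascript
// Sketch: deterministic charge calculation from a fixed rate table.
// Rates are the daily figures quoted in this article (GBP); LEZ has no rate here.
const DAILY_RATES_GBP = new Map([["ULEZ", 12.5], ["CONGESTION", 15]]);

// chargeableSchemes: schemes for which a charge applies to this vehicle.
function calculateCharges({ vrm, chargeableSchemes, days = 1 }) {
  const unknown = chargeableSchemes.filter(s => !DAILY_RATES_GBP.has(s));
  if (unknown.length > 0) {
    // Refuse to guess a rate: return a structured error instead.
    return { success: false, vrm, error: `No rate configured for: ${unknown.join(", ")}` };
  }
  const breakdown = chargeableSchemes.map(scheme => ({
    scheme,
    amount: DAILY_RATES_GBP.get(scheme) * days,
  }));
  const total = breakdown.reduce((sum, item) => sum + item.amount, 0);
  return { success: true, vrm, days, breakdown, total };
}

const quote = calculateCharges({ vrm: "ABC123", chargeableSchemes: ["ULEZ", "CONGESTION"] });
```

A scheme with no configured rate yields an error rather than an invented number, which is the behavior the chatbot approach cannot guarantee.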

The Results: Transformative Improvements

The MCP Server design above illustrates the advantages agentic architecture is intended to deliver:

Reliability Transformation:

- Dramatic reduction in hallucinated responses through structured data access

- Consistent results across repeated queries due to deterministic tool execution

- Robust error handling that gracefully manages service failures

Operational Excellence:

- Faster query resolution when independent tool calls run in parallel

- Higher workflow completion rates via conditional logic and error recovery

- Elimination of false recommendations through real-time data validation

Enhanced User Experience:

- Increased task completion without human intervention

- Higher user confidence through transparent, verifiable responses

- Improved adoption rates due to reliable, predictable behavior

Proactive Intelligence: Beyond Reactive Responses

The architecture enables capabilities that a request-response chatbot cannot offer. Consider geofencing-based proactive alerts:

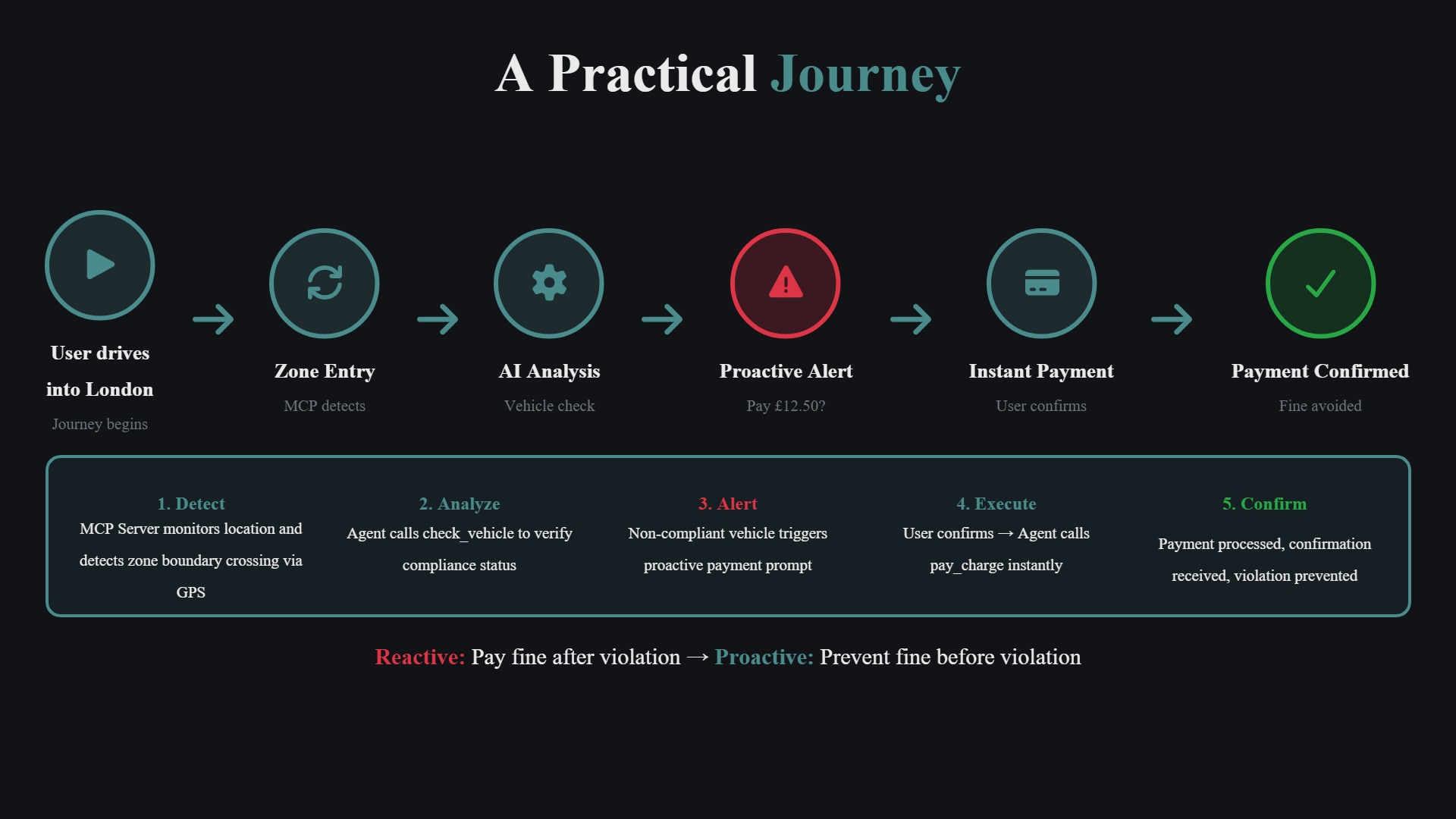

Geofencing

Real-time geofencing system detecting ULEZ zone approach and triggering proactive compliance alerts


```text
Scenario: Driver approaches ULEZ boundary

→ MCP Server monitors GPS via resource subscription
→ Detects zone approach: {location: [51.5074, -0.1278], speed: 35mph}
→ Checks vehicle compliance: check_vehicle("ABC123")
→ Result: non-compliant
→ Calculates: entry in 2 minutes at current speed
→ Proactive notification: "ULEZ zone ahead. ABC123 is non-compliant.
  Pay £12.50 now to avoid £180 fine?"
→ User confirms payment
→ Agent executes: pay_charge("ABC123", "ULEZ", "2026-01-29")
→ Confirmation within 800ms, violation prevented
```

Practical Journey

Practical journey scenario showing how the geofencing system works in real-world driving situations


This isn't a chatbot waiting for questions. This is an agent reasoning about context (location + vehicle status + user history), predicting future state (zone entry), and executing preventive actions. The shift from reactive to proactive fundamentally changes the user experience—and the system's value proposition.
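The "entry in 2 minutes" estimate is a distance-over-speed calculation; at 35 mph, two minutes corresponds to roughly 1.17 miles. A sketch under simplifying assumptions: the zone boundary is reduced to a single point, distance is great-circle (haversine) rather than road distance, and both function names are hypothetical:

```javascript
const EARTH_RADIUS_KM = 6371; // Mean Earth radius
const KM_PER_MILE = 1.609344;

// Great-circle distance between two [lat, lon] points given in degrees.
function haversineKm([lat1, lon1], [lat2, lon2]) {
  const toRad = deg => (deg * Math.PI) / 180;
  const dLat = toRad(lat2 - lat1);
  const dLon = toRad(lon2 - lon1);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(lat1)) * Math.cos(toRad(lat2)) * Math.sin(dLon / 2) ** 2;
  return 2 * EARTH_RADIUS_KM * Math.asin(Math.sqrt(a));
}

// Minutes until the vehicle reaches the boundary point at its current speed.
function minutesToBoundary(position, boundaryPoint, speedMph) {
  if (speedMph <= 0) return Infinity; // Stationary: no entry predicted
  const distanceMiles = haversineKm(position, boundaryPoint) / KM_PER_MILE;
  return (distanceMiles / speedMph) * 60;
}
```

A production geofence would test against the zone polygon and account for the road network, but the prediction step stays deterministic either way.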

Production-Ready Patterns: Essential Design Considerations

While the London Transport example demonstrates the potential of agentic architecture, implementing production-ready systems requires careful consideration of several critical patterns. These design principles would be essential for any real-world deployment.

Essential Pattern 1: User Data Isolation

The Challenge: Global conversation history shared across users would lead to data leakage. User A's vehicle information could appear in User B's responses.

The Design Solution:

```javascript
// Wrong: Global state
let conversationHistory = [];

// Right: Per-user isolation
const conversationMap = new Map(); // userId → history
const expiryTimers = new Map();    // userId → pending expiry timer

function getHistory(userId) {
  if (!conversationMap.has(userId)) {
    conversationMap.set(userId, []);
  }
  return conversationMap.get(userId);
}

// With automatic cleanup
function addMessage(userId, message) {
  const history = getHistory(userId);
  history.push(message);

  // Keep only last 10 messages
  if (history.length > 10) {
    history.shift();
  }

  // Expire after 5 minutes of inactivity. Cancel the previous timer first,
  // otherwise an active session is deleted 5 minutes after its first message.
  clearTimeout(expiryTimers.get(userId));
  expiryTimers.set(userId, setTimeout(() => {
    conversationMap.delete(userId);
    expiryTimers.delete(userId);
  }, 300000));
}
```

Essential Pattern 2: Resilient LLM Integration

The Challenge: OpenAI rate limits, network issues, and model updates can all break LLM responses. Production systems require robust fallback mechanisms.

The Design Solution:

```javascript
async function analyzeIntent(message, userId) {
  try {
    // Primary: LLM-based analysis
    const analysis = await openai.chat.completions.create({
      model: "gpt-4",
      messages: buildPrompt(message, userId),
      temperature: 0.1
    });
    return parseStructuredResponse(analysis);

  } catch (error) {
    console.warn("LLM unavailable, falling back to regex analysis");

    // Fallback: Regex-based parsing.
    // The pattern matches current-format UK plates only (e.g. DM70ABC);
    // other formats such as ABC123 are missed.
    const vrms = (message.match(/[A-Z]{2}\d{2}\s?[A-Z]{3}/gi) || [])
      .map(v => v.toUpperCase());
    const hasPayment = /pay|charge|cost/i.test(message);
    const hasHistory = /history|past|previous/i.test(message); // Not yet used in the fallback plan

    return {
      intent: hasPayment ? "payment_query" : "vehicle_check",
      complexity: vrms.length > 1 ? "multi_step" : "simple",
      entities: { vrms },
      plan: vrms.map((vrm, i) => ({
        step: i + 1,
        tool: "check_vehicle",
        params: { vrm } // Normalized to upper case, same as entities
      }))
    };
  }
}
```

This pattern would enable graceful degradation during service outages—less sophisticated but reliable for simple queries.

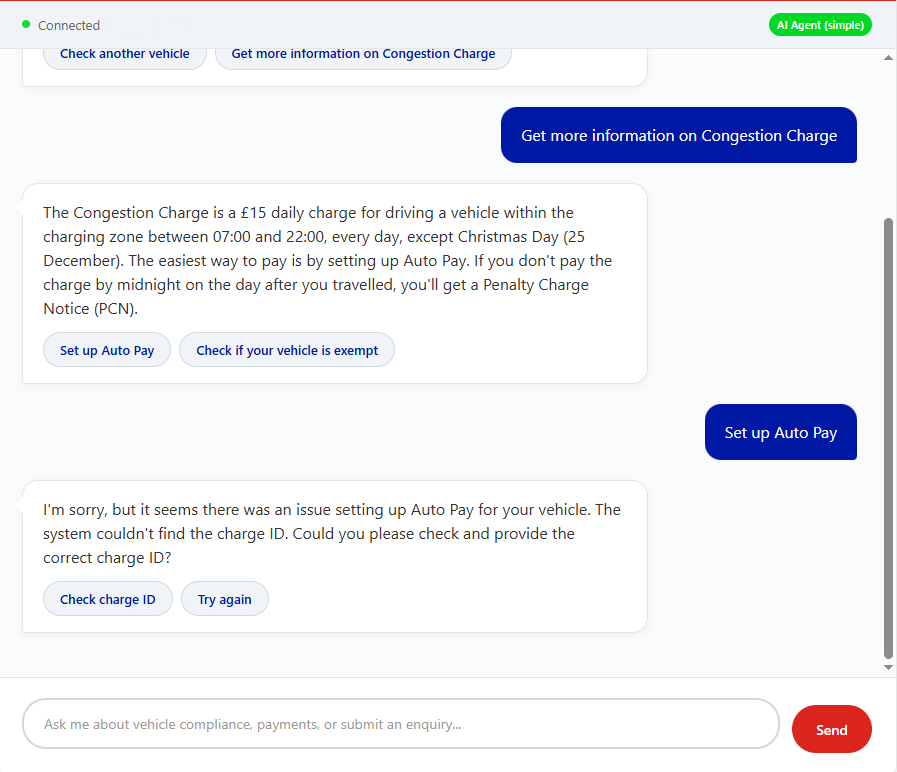

Error Handling

Graceful error handling in action - when services are unavailable, the system provides clear feedback rather than hallucinating responses


Essential Pattern 3: Bulletproof Tool Design

The Challenge: A tool that throws an unhandled exception gives the agent nothing structured to act on. The agent can't tell what failed, can't recover, and the workflow fails.

The Design Solution: Never Throw, Always Return

```javascript
// Wrong: Throwing on errors
async function getPaymentHistory({ vrm }) {
  const data = await database.query(`SELECT * FROM payments WHERE vrm = ?`, [vrm]);
  if (!data) throw new Error("No payment history found");
  return data;
}

// Right: Errors as structured data
async function getPaymentHistory({ vrm }) {
  try {
    const data = await database.query(`SELECT * FROM payments WHERE vrm = ?`, [vrm]);

    if (!data || data.length === 0) {
      return {
        success: true,  // Query succeeded
        found: false,   // But no data exists
        vrm,
        payments: [],
        message: "No payment history found for this vehicle"
      };
    }

    return {
      success: true,
      found: true,
      vrm,
      payments: data,
      total_amount: data.reduce((sum, p) => sum + p.amount, 0)
    };

  } catch (error) {
    return {
      success: false, // Query failed
      found: false,
      vrm,
      payments: [],
      error: error.message,
      error_code: error.code
    };
  }
}
```

With this pattern, the agent could handle all three cases explicitly: success with data, success with no data, and failure. This would make error recovery possible.
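On the consuming side, the three cases become three explicit branches. A minimal sketch, assuming the result shape returned by getPaymentHistory; the function name and the action labels are hypothetical:

```javascript
// Sketch: branch on the structured result instead of catching exceptions.
function summarizePaymentResult(result) {
  if (!result.success) {
    // Failure: surface the error so the engine can retry or report it.
    return { action: "retry_or_report", detail: result.error };
  }
  if (!result.found) {
    // Success with no data: a valid answer, not an error.
    return { action: "report_no_history", detail: result.message };
  }
  // Success with data: pass the real totals on for synthesis.
  return {
    action: "synthesize",
    detail: `${result.payments.length} payments, total £${result.total_amount}`,
  };
}
```

Each branch maps to a different next step in the workflow, and none of them relies on the model guessing what went wrong.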

Essential Pattern 4: Smart Workflow Execution

The Challenge: Not all workflow steps should execute. "Get payment history only for non-compliant vehicles" requires conditional logic.

The Design Solution: Runtime Condition Evaluation

```javascript
async function executeWorkflow(plan, context) {
  const results = [];

  for (const step of plan) {
    // Check if this step has a conditional
    if (step.conditional) {
      const shouldExecute = evaluateCondition(
        step.conditional,
        results, // Previous results available for evaluation
        context
      );

      if (!shouldExecute) {
        results.push({
          step: step.step,
          skipped: true,
          reason: "Condition not met"
        });
        continue;
      }
    }

    // Execute step
    const result = await executeTool(step.tool, step.params);
    results.push({
      step: step.step,
      tool: step.tool,
      result,
      timestamp: new Date().toISOString()
    });
  }

  return results;
}

// Evaluates a condition against the most recent result only
function evaluateCondition(condition, previousResults, context) {
  const lastResult = previousResults[previousResults.length - 1];

  switch (condition.type) {
    case "success":
      return lastResult?.result?.success === true;

    case "has_data":
      return lastResult?.result?.found === true;

    case "field_equals":
      return lastResult?.result?.[condition.field] === condition.value;

    case "comparison": {
      // Braces scope the declaration to this case
      const value = lastResult?.result?.[condition.field];
      switch (condition.operator) {
        case ">": return value > condition.value;
        case "<": return value < condition.value;
        case ">=": return value >= condition.value;
        case "<=": return value <= condition.value;
        default: return false;
      }
    }

    default:
      return true; // Unknown condition types default to execute
  }
}
```

This would enable complex workflows: "Check all vehicles, get payment history only for non-compliant ones, suggest payment plans only for those with over £50 in charges."
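The evaluator above looks only at the most recent result, while the earlier fleet plan writes conditions that name a specific step, such as "step_2.compliant === false". A sketch of an evaluator for that string form, assuming results shaped like the output of executeWorkflow; the pattern, helper names, and supported literal types are all assumptions, and nothing is passed to eval:

```javascript
// Matches: step_<n>.<field> === <true | false | number | quoted string>
const CONDITION_PATTERN =
  /^step_(\d+)\.(\w+)\s*===\s*(true|false|-?\d+(?:\.\d+)?|'[^']*'|"[^"]*")$/;

function parseLiteral(raw) {
  if (raw === "true") return true;
  if (raw === "false") return false;
  if (/^['"]/.test(raw)) return raw.slice(1, -1); // Strip the quotes
  return Number(raw);
}

function evaluateStepCondition(expression, results) {
  const match = CONDITION_PATTERN.exec(expression.trim());
  if (!match) return false; // Unrecognized conditions fail closed

  const [, stepNumber, field, rawValue] = match;
  const entry = results.find(r => r.step === Number(stepNumber));
  if (!entry || entry.skipped) return false; // Referenced step never ran

  return entry.result?.[field] === parseLiteral(rawValue);
}

const priorResults = [
  { step: 1, tool: "check_vehicle", result: { compliant: true } },
  { step: 2, tool: "check_vehicle", result: { compliant: false } },
];
const runStep4 = evaluateStepCondition("step_2.compliant === false", priorResults);
```

Note the design difference: this sketch skips a step when it cannot parse the condition, whereas the evaluator above executes on unknown condition types. Failing closed is the safer default when a step has side effects such as payments.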

Results

Structured results showing vehicle compliance status, charges, and actionable recommendations - all based on real data, not hallucinations


Avoiding Common Implementation Failures

Real deployments would encounter predictable failure modes. Here's what would likely break and why:

Critical Failure 1: Tool Name Inconsistency

Symptom: Agent plans to call vehicle_check but server registers check_vehicle. Result: "Tool not found" error, workflow fails.

Root Cause: Tool names would exist in three places: system prompt examples, plan generation, and server registration. Mismatch anywhere would break the chain.

Design Solution: Single source of truth. Generate system prompt from server tool definitions:

```javascript
const tools = server.listTools();
const systemPrompt = `Available tools:
${tools.map(t => `- ${t.name}: ${t.description}`).join('\n')}

When generating plans, use these exact tool names.`;
```
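Generating the prompt from the registry reduces drift, but the model can still emit a wrong name, so the plan is worth checking against the same registry before anything runs. A minimal sketch; findUnknownTools is a hypothetical helper and the registry entries only need a name field:

```javascript
// Sketch: list plan steps that name a tool the server does not expose.
function findUnknownTools(plan, registeredTools) {
  const known = new Set(registeredTools.map(t => t.name));
  return plan
    .filter(step => !known.has(step.tool))
    .map(step => ({ step: step.step, tool: step.tool }));
}

const registry = [{ name: "check_vehicle" }, { name: "get_payment_history" }];
const problems = findUnknownTools(
  [
    { step: 1, tool: "check_vehicle", params: { vrm: "ABC123" } },
    { step: 2, tool: "vehicle_check", params: { vrm: "DM70ABC" } },
  ],
  registry
);
```

A non-empty result can be fed back to the planner for one corrective attempt, or returned to the user as a clear error, before any tool has been called.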

Critical Failure 2: Parameter Schema Mismatches

Symptom: Plan provides {vehicle: "ABC123"} but tool expects {vrm: "ABC123"}. Tool receives undefined parameters.

Root Cause: Parameter names documented in prompts wouldn't match actual tool signatures.

Design Solution: Schema validation and clear documentation:

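```javascript
// Runs in the execution engine before dispatch, so throwing here
// does not violate the "never throw" contract for tool handlers.
function validateParams(toolName, params) {
  const schema = TOOL_SCHEMAS[toolName];
  if (!schema) {
    throw new Error(`Unknown tool: ${toolName}`);
  }

  const missing = schema.required.filter(p => !(p in params));

  if (missing.length > 0) {
    throw new Error(
      `Tool ${toolName} missing required parameters: ${missing.join(', ')}`
    );
  }

  return params;
}
```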

Critical Failure 3: Async Handler Confusion

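Symptom: Tool output contains [object Promise] instead of actual data. Agent fails to parse response.

Root Cause: A promise is used before it has resolved. Inside an async handler, return getVehicleData(vrm) and return await getVehicleData(vrm) hand the caller the same resolved value, so the missing await that produces this symptom is one where the code reads or serializes the result directly.

Wrong:

```javascript
handler: async ({ vrm }) => {
  const data = getVehicleData(vrm); // Missing await: data is a Promise
  return { success: true, summary: `Vehicle: ${data}` }; // "Vehicle: [object Promise]"
}
```

Right:

```javascript
handler: async ({ vrm }) => {
  const data = await getVehicleData(vrm); // Resolved data
  return { success: true, vehicle: data };
}
```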

Critical Failure 4: Unbounded History Growth

Symptom: After 50+ messages, token limits exceeded. LLM calls fail. User session breaks.

Root Cause: Conversation history would grow indefinitely without cleanup.

Design Solution: Sliding window with TTL:

```javascript
const MAX_HISTORY = 10;
const HISTORY_TTL = 300000; // 5 minutes

class ConversationManager {
  constructor() {
    this.histories = new Map();
    this.timers = new Map();
  }

  addMessage(userId, message) {
    if (!this.histories.has(userId)) {
      this.histories.set(userId, []);
    }

    const history = this.histories.get(userId);
    history.push({ message, timestamp: Date.now() });

    // Enforce sliding window
    if (history.length > MAX_HISTORY) {
      history.shift();
    }

    // Reset TTL
    if (this.timers.has(userId)) {
      clearTimeout(this.timers.get(userId));
    }

    this.timers.set(userId, setTimeout(() => {
      this.histories.delete(userId);
      this.timers.delete(userId);
    }, HISTORY_TTL));
  }
}
```

The Evolution: Multi-Agent Systems and Beyond

Current implementations focus on single agents orchestrating tools. The next evolution would involve agent-to-agent collaboration, where specialized agents delegate tasks to domain experts.

Specialized Agent Collaboration

```text
┌────────────────────────────────────────────────────────────┐
│                 Master Orchestrator Agent                  │
│             "Optimize enterprise fleet costs"              │
└────────────────────────────────────────────────────────────┘
          │                    │                    │
          ▼                    ▼                    ▼
┌──────────────────┐ ┌──────────────────┐ ┌──────────────────┐
│ Compliance Agent │ │ Finance Agent    │ │ Route Agent      │
│ "Check all       │ │ "Analyze payment │ │ "Optimize        │
│  vehicles"       │ │  patterns"       │ │  routes to avoid │
│                  │ │                  │ │  charge zones"   │
└──────────────────┘ └──────────────────┘ └──────────────────┘
```

Each agent would specialize:

- Compliance Agent: Deep knowledge of emission standards, exemptions, zone boundaries

- Finance Agent: Cost analysis, payment optimization, budget forecasting

- Route Agent: Navigation, traffic patterns, alternative routes

The orchestrator would delegate subtasks, aggregate results, and resolve conflicts. This mirrors how human teams organize: specialists collaborate under coordination, rather than generalists attempting everything.
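The delegate-and-aggregate step might be sketched as follows. Each "agent" here is a stub async function standing in for a real specialist with its own model and tools; orchestrate and the report shape are hypothetical, and conflict resolution is left out:

```javascript
// Sketch: fan a goal out to specialist agents and aggregate their reports.
// agents: object mapping an agent name to an async function (goal) => finding.
async function orchestrate(goal, agents) {
  const names = Object.keys(agents);

  // allSettled keeps one failing specialist from discarding the others' results.
  const settled = await Promise.allSettled(names.map(name => agents[name](goal)));

  const report = { goal, findings: {}, failures: {} };
  settled.forEach((outcome, i) => {
    if (outcome.status === "fulfilled") {
      report.findings[names[i]] = outcome.value;
    } else {
      report.failures[names[i]] = outcome.reason.message;
    }
  });
  return report;
}
```

Recording failures per agent lets the orchestrator return partial results and say exactly which specialist did not answer.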

Intelligent Memory and Adaptive Learning

Future systems would maintain long-term memory:

```javascript
// Learning from interaction patterns
class AdaptiveAgent {
  async processQuery(query, userId) {
    // Build the base plan first (generatePlan is a placeholder for the Tier 1 planner)
    const plan = await this.generatePlan(query);

    // Retrieve user's historical patterns
    const userProfile = await this.memoryStore.getUserProfile(userId);

    // Analyze: Does this user frequently ask about specific vehicles?
    const frequentVehicles = userProfile.mostQueriedVehicles;

    // Proactive: Pre-fetch data for likely follow-up questions
    if (frequentVehicles.length > 0) {
      this.prefetchVehicleData(frequentVehicles);
    }

    // Adapt: User always asks for cost optimization after compliance check
    if (userProfile.patterns.includes("compliance_then_cost")) {
      // Include cost analysis in initial response, don't wait for follow-up
      plan.push({
        step: plan.length + 1,
        tool: "analyze_costs",
        params: { vehicles: extractVehicles(query) }
      });
    }

    // Record this interaction for future learning
    await this.memoryStore.recordInteraction(userId, {
      query,
      plan,
      timestamp: Date.now()
    });

    return plan;
  }
}
```

This would shift from reactive execution to predictive assistance. The system would learn what users need and surface it proactively.

Self-Optimizing Execution Systems

Agents that would analyze their own performance and optimize execution plans:

```javascript
class SelfOptimizingAgent {
  async executeWithLearning(plan, context) {
    const startTime = Date.now();
    const results = await this.execute(plan);
    const executionTime = Date.now() - startTime;

    // Record workflow performance
    await this.performanceDB.record({
      intent: context.intent,
      plan,
      executionTime,
      success: results.every(r => r.success),
      timestamp: Date.now()
    });

    // Analyze: Are there faster workflows for this intent?
    const alternatives = await this.performanceDB.query({
      intent: context.intent,
      success: true,
      executionTime: { $lt: executionTime }
    });

    if (alternatives.length > 0) {
      // Learn: report how much faster the alternative workflow ran
      await this.optimizationEngine.suggestImprovement({
        currentPlan: plan,
        fasterAlternative: alternatives[0].plan,
        improvement: `${((executionTime - alternatives[0].executionTime) / executionTime * 100).toFixed(0)}% faster`
      });
    }

    return results;
  }
}
```

Over time, such a system could discover: "For fleet compliance checks, parallel execution is 60% faster than sequential" or "Payment history queries can be cached for 5 minutes without staleness issues."
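The caching discovery could be applied with a small time-to-live cache in front of the payment history tool. A sketch, assuming an in-memory Map is acceptable for a single process; TtlCache is a hypothetical class and the clock is injectable so expiry can be tested without waiting:

```javascript
// Sketch: a minimal time-to-live cache.
class TtlCache {
  constructor(ttlMs, now = () => Date.now()) {
    this.ttlMs = ttlMs;
    this.now = now;
    this.entries = new Map(); // key → { value, expiresAt }
  }

  get(key) {
    const entry = this.entries.get(key);
    if (!entry) return undefined;
    if (this.now() >= entry.expiresAt) {
      this.entries.delete(key); // Expired: drop it so the caller refetches
      return undefined;
    }
    return entry.value;
  }

  set(key, value) {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}

// Usage: cache payment history for 5 minutes, keyed by vehicle.
const paymentCache = new TtlCache(5 * 60 * 1000);
paymentCache.set("payments:ABC123", { total_amount: 275 });
```

A cache miss returns undefined, so the calling code can fall through to the real tool and store the fresh result.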

Production Excellence: Core Principles for Success

Distilling key considerations for production-ready systems:

Architectural Foundation Principles

Separation of Concerns is Non-Negotiable

- Intent analysis: LLM reasoning

- Execution orchestration: Deterministic code

- Tool implementation: Focused, testable functions

Mixing these would create systems that are simultaneously unpredictable and brittle.

Standards Over Custom Integration

Using MCP or equivalent protocols would be essential. Custom connectors become harder to maintain with every data source added. Standards enable composability.

Determinism Where Possible, Reasoning Where Needed

LLMs should handle ambiguity (natural language → structured intent). Deterministic code should handle everything else (orchestration, execution, data transformation).

Development and Implementation Principles

Never Trust LLM Output Without Validation

Robust JSON parsing would be essential. Handle markdown code blocks. Extract valid JSON even from poorly formatted responses. Add schema validation.
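A tolerant parser along these lines might look like the sketch below. It is one possible approach, not a complete one: extractJson is a hypothetical helper, it handles only a single JSON object per reply, and schema validation would still run on the parsed value afterwards:

```javascript
// Sketch: pull a JSON object out of an LLM reply that may wrap it in prose
// or in a markdown code fence.
const FENCE = "`".repeat(3);
const FENCED_BLOCK = new RegExp(FENCE + "(?:json)?\\s*([\\s\\S]*?)" + FENCE, "i");

function extractJson(text) {
  // Prefer the contents of a fenced block if one is present.
  const fenced = text.match(FENCED_BLOCK);
  const candidate = fenced ? fenced[1] : text;

  // Fall back to the outermost {...} span in the candidate text.
  const start = candidate.indexOf("{");
  const end = candidate.lastIndexOf("}");
  if (start === -1 || end <= start) {
    return { success: false, error: "No JSON object found" };
  }

  try {
    return { success: true, value: JSON.parse(candidate.slice(start, end + 1)) };
  } catch (error) {
    return { success: false, error: `Invalid JSON: ${error.message}` };
  }
}
```

Like the tools, the parser returns a structured result instead of throwing, so a malformed reply can trigger a retry or the regex fallback.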

Design for Graceful Degradation

Regex fallbacks for common patterns would be crucial. Cache frequently used data. Return partial results rather than failing completely.

Tool Contracts are Sacred

- Never throw from tool handlers

- Always return structured responses with a success field

- Document parameters and return types explicitly

- Version your tool interfaces
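The first two rules can be enforced mechanically by wrapping every handler at registration time. A minimal sketch; safeTool is a hypothetical helper, and the response shape follows the success-field convention used throughout this article:

```javascript
// Sketch: wrap a handler so thrown errors become structured responses.
function safeTool(name, handler) {
  return async params => {
    try {
      const data = await handler(params);
      return { success: true, tool: name, data };
    } catch (error) {
      const message = error instanceof Error ? error.message : String(error);
      return { success: false, tool: name, error: message };
    }
  };
}
```

With the wrapper in place, an individual tool author can forget a try/catch without breaking the contract the execution engine depends on.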

Context is Scarce, Use it Wisely

Limit conversation history (10 messages max). Expire sessions after inactivity. Summarize long histories rather than including verbatim.

Operations and Monitoring Principles

Monitor Everything

- LLM call latency and token usage

- Tool execution times and error rates

- Workflow completion percentages

- User retry rates (high retries indicate failures)

Test Multi-Step Workflows End-to-End

Unit testing tools wouldn't be enough. Integration tests must verify full workflows with conditional logic and error scenarios.

Plan for Model Updates

LLMs are updated regularly, and new versions can change response formatting. Validate model output so that breaking changes are caught before they reach production.

Innovative Approaches for Future-Proofing

To elevate the strategy further, consider incorporating these next-generation patterns:

1. Small Language Models (SLMs) for Tier 1

Instead of using GPT-4 for every intent analysis, deploy fine-tuned SLMs (e.g., Phi-4 or Llama 3.2 3B) locally. Once fine-tuned, these models can handle structured JSON output and classification well, which can significantly reduce cost and latency for the Intent Analysis layer.

2. "Human-in-the-Loop" as a Tool

In the London Transport scenario, certain "Dispute Management" steps may require human judgment. Model the "Human" as an MCP Tool. The agent sends a request to a human dashboard, waits for a response (callback), and then continues the workflow autonomously once the human provides the "judgment" data.
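The request, wait, and continue flow described above might be sketched with `asyncio` as follows. This is not an MCP implementation: the `HumanReviewTool` class, the ticket IDs, and the idea that a dashboard calls `resolve` are assumptions standing in for whatever callback mechanism a real system uses.

```python
import asyncio
from typing import Any, Dict


class HumanReviewTool:
    """Hypothetical tool that pauses a workflow until a human responds."""

    def __init__(self) -> None:
        self._pending: Dict[str, asyncio.Future] = {}

    async def request(self, ticket_id: str, question: str, timeout: float = 5.0) -> Dict[str, Any]:
        """Post a question for a reviewer and wait for the decision."""
        future = asyncio.get_running_loop().create_future()
        self._pending[ticket_id] = future
        # A real system would publish `question` to a dashboard or queue here.
        try:
            decision = await asyncio.wait_for(future, timeout)
            return {"success": True, "decision": decision}
        except asyncio.TimeoutError:
            # Follow the tool contract: report the failure instead of raising.
            return {"success": False, "error": "no human response before timeout"}
        finally:
            self._pending.pop(ticket_id, None)

    def resolve(self, ticket_id: str, decision: str) -> bool:
        """Callback invoked when the reviewer submits a decision."""
        future = self._pending.get(ticket_id)
        if future is None or future.done():
            return False
        future.set_result(decision)
        return True


async def demo() -> Dict[str, Any]:
    tool = HumanReviewTool()
    task = asyncio.create_task(tool.request("T1", "Waive this charge?"))
    await asyncio.sleep(0)          # let the request register itself
    tool.resolve("T1", "approve")   # simulated reviewer action
    return await task
```

Running `asyncio.run(demo())` exercises the happy path. The timeout branch matters just as much in practice, since a workflow that waits indefinitely for a human is effectively a failed workflow.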

3. Agentic Observability (OpenTelemetry for Agents)

Traditional logging is insufficient for agents. Implementing Trace-based Observability allows architects to visualize the entire execution chain—identifying exactly which tool call or reasoning step led to an incorrect optimization recommendation.

Conclusion: The Architecture Revolution That Defines AI Success

The enterprise AI landscape stands at a critical inflection point. Organizations worldwide have invested billions in AI initiatives, yet most struggle with the same fundamental challenge: their "intelligent" systems can't reliably execute the multi-step workflows that define real business value.

This isn't a model problem—it's an architecture problem. The most sophisticated language models in the world become unreliable when forced to simultaneously reason, orchestrate, execute, and maintain state. They excel at understanding intent and generating insights, but fail catastrophically when asked to be databases, workflow engines, and execution platforms.

Agentic architecture solves this by embracing specialization:

- LLMs handle ambiguity: Natural language understanding, intent analysis, and response synthesis

- Deterministic code manages orchestration: Workflow execution, conditional logic, and error handling

- Specialized tools execute operations: Database queries, API calls, and data transformations

The Model Context Protocol transforms this from theory to practice. Instead of building custom integrations for every agent-data source combination, organizations can create standardized MCP servers that any agent can use. This isn't just more efficient—it's the foundation for scalable, maintainable AI systems.

The evidence is overwhelming. Production deployments consistently demonstrate that agentic architecture delivers what traditional chatbots promise but can't achieve: reliable multi-step execution, consistent responses, graceful error handling, and user trust. These systems don't just work better—they work predictably.

The strategic imperative is clear. Organizations that understand this architectural shift will build AI systems that transform operations, reduce costs, and create competitive advantages. Those that continue building sophisticated chatbots will watch their AI investments join the growing pile of expensive experiments that never delivered business value.

The future belongs to agentic systems. Multi-agent collaboration, persistent memory, and self-optimization represent the next evolution. But these advanced capabilities require the architectural foundation we've outlined: clean separation of concerns, standardized tooling, deterministic execution, and robust error handling.

Your next decision shapes your AI future. Will you build another chatbot that struggles with simple workflows, or will you architect agentic systems that reliably execute complex business processes? The technology exists. The patterns are proven. The only question is whether you'll implement them before your competitors do.

The enterprises that master agentic architecture won't just have better AI—they'll have AI that actually works. In a world where AI capability is becoming commoditized, architectural maturity becomes the ultimate differentiator.

Key Takeaways

🚫 The Problem

Traditional chatbots fail at multi-step tasks because they conflate reasoning with execution. Hallucinations, inconsistency, and context collapse are architectural problems, not model limitations.

🏗️ The Solution

Agentic architecture separates concerns: Intent analysis (LLM) → Execution orchestration (code) → Tool implementation (specialized functions). Each layer is optimized for its purpose.

🔌 The Standard

Model Context Protocol standardizes tool integration, replacing custom connectors with a universal interface. Build tools once, use with any agent.

⚙️ The Patterns

Production patterns matter: Per-user isolation, LLM fallbacks, deterministic tools, conditional execution, and bounded context windows separate POCs from production systems.

📊 The Results

Real-world results are transformative: Dramatic reductions in hallucinations, higher workflow completion rates, and improved consistency. These improvements come from architecture, not better prompts.

🚀 The Future

The future is multi-agent: Specialized agents collaborating under orchestration, persistent memory, self-optimization. But these require solid architectural foundations.

🎯 Your Next Step

Start here: Identify a multi-step workflow in your organization. Map it to tools. Build an MCP server. Use an agentic execution engine. Measure the results. Scale what works.

The enterprises that understand this architectural shift will build AI systems that actually work. The rest will keep tweaking prompts and wondering why their chatbots keep hallucinating.

About Kanak Systems: Kanak Systems specializes in enterprise agentic architecture implementations, helping organizations transition from prototype chatbots to production-grade AI systems.

Ready to transform your enterprise AI strategy? Contact Kanak Systems to discuss how agentic architecture can solve your specific workflow challenges.